‘Facts, Sir, Are Nothing Without Their Nuance.’

So said Norman Mailer, the Pulitzer Prize-winning author, testifying in the controversial trial of seven Americans arrested for protesting the Vietnam War (the “Chicago 7”), one of the United States’ most celebrated free-speech cases. To help us navigate the fraught path of deriving meaning from impartial information, we’ve created this Intermediary Liability Blog.

Context is King: How Correct Data Can Lead to False Conclusions

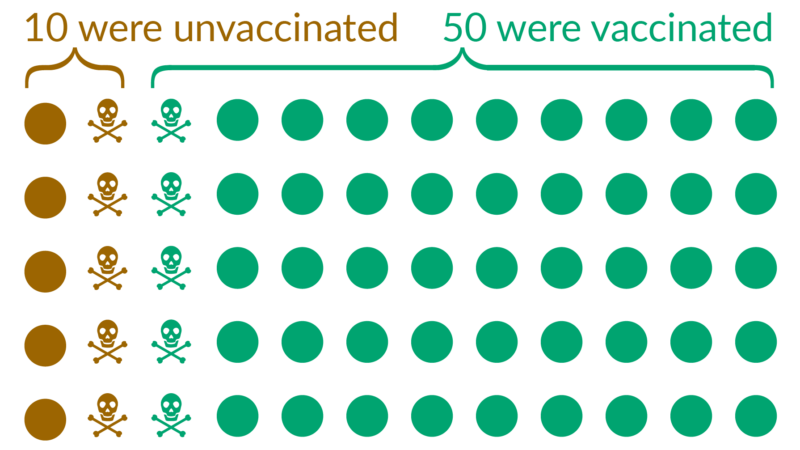

On 12 October 2021, in a now-infamous episode of the Joe Rogan podcast, anti-vaxxer Alex Berenson said that “the vast majority of people in Britain who died in September were fully vaccinated,” offering this dubious fact to support his view that COVID-19 vaccines were as dangerous as the virus itself. The statement, while formally correct and based on official data from the United Kingdom National Health Service, was highly misleading. It omitted the crucial context that the vast majority of the British population was vaccinated, so naturally the vast majority of those who died were vaccinated as well. In fact, the mortality rate of the unvaccinated population – seen in the right context – was several times higher than that of vaccinated people. In the aftermath, many scientists and fact checkers moved to clarify this. Charts were published to unpack the issues involved and to inform the public about the importance of contextual data in drawing the right conclusions. The whole story went down as an episode of misinformation and has since blown up into a full-fledged crisis at Spotify Technology s.a., the company hosting and financing the podcast.

Source: Our World in Data
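
The arithmetic behind Berenson’s claim is a textbook base-rate effect. The short Python sketch below uses invented round numbers, not the actual UK figures: a population that is 90% vaccinated and an unvaccinated death rate assumed to be five times the vaccinated one. Even under those assumptions, most deaths fall among the vaccinated, simply because there are so many more of them.

```python
# Hypothetical illustration of the base-rate effect. All inputs are invented
# for the example and are NOT the actual UK figures.

def deaths_by_group(population, vaccinated_share, rate_vaccinated, rate_unvaccinated):
    """Return (deaths among vaccinated, deaths among unvaccinated) given per-person death rates."""
    vaccinated = population * vaccinated_share
    unvaccinated = population - vaccinated
    return vaccinated * rate_vaccinated, unvaccinated * rate_unvaccinated

# Assumed: 90% coverage; unvaccinated death rate five times the vaccinated rate.
vax_deaths, unvax_deaths = deaths_by_group(
    population=1_000_000,
    vaccinated_share=0.9,
    rate_vaccinated=10 / 100_000,
    rate_unvaccinated=50 / 100_000,
)

share_of_deaths_vaccinated = vax_deaths / (vax_deaths + unvax_deaths)

print(f"Deaths among vaccinated:   {vax_deaths:.0f}")
print(f"Deaths among unvaccinated: {unvax_deaths:.0f}")
print(f"Share of all deaths that were vaccinated: {share_of_deaths_vaccinated:.0%}")
```

With these hypothetical inputs, roughly 64% of deaths occur among the vaccinated, even though each vaccinated person’s risk is one-fifth that of an unvaccinated person. The share of deaths means little until it is set against each group’s share of the population.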

Of course, vaccination is a uniquely important topic, attracting high levels of attention, so the reaction was prompt and effective. But the majority of public-policy issues do not get such scrutiny and attention, and the use of out-of-context statistics is much less likely to be noticed or corrected.

One area where similar leaps to conclusions are taking place is the ongoing debate on the Digital Services Act (DSA), an omnibus piece of European Union legislation making its way through the cumbersome EU decision-making process. Differing versions of the legislation are now before the European Parliament and the Council of the European Union (which represents the EU’s 27 member states), while technical experts negotiate a common text that, once approved, will become law (the process is called “trilogue” because the European Commission is part of the negotiation, too). Both versions seek to set new, tougher requirements for online platforms, including granting consumers the right to appeal algorithmic rankings and requiring some companies to disclose potentially sensitive data to non-profit organisations and journalists. And both versions seek to expand European Union powers in a crucial area: tougher reporting requirements and rules for goods being sold online.

But is the problem of illegal and counterfeit goods really as large as the solution proposed? Officials in France – the home of luxury goods makers Hermès International s.a., Kering, L’Oréal s.a. and LVMH Moët Hennessy Louis Vuitton – seem to think so. On 15 October 2021, almost at the same time as the Rogan podcast fiasco, the French Ministry of the Economy, Finance and Recovery published Conformité des produits vendus en marketplaces (Compliance of Products Sold on Marketplaces), a timely report obviously intended to feed the ongoing DSA debate in Brussels. It stated, boldly, that 60% of the products it had sampled were illegal goods that did not comply with basic health or other product standards, and that 32% were downright dangerous. But the context and a close analysis of the underlying data showed a different story, one where the problem was perhaps not as systematic as a skewed look at the data would imply. The survey is based on merely 129 products across 10 marketplaces, and no information is provided on how the sample was selected. Amazon.com Inc., for one, offers 75 million products for sale every day. Should its online marketplace really be judged on the basis of a highly selective survey of 129 products?
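
To put a sample of 129 products in perspective, a standard normal-approximation (Wald) confidence interval for a proportion can be computed in a few lines of Python. This is a sketch under a generous assumption: the interval is only meaningful for a simple random sample, and the report does not say how its products were chosen, so it captures sampling noise but not selection bias. The figure of about 13 products per marketplace is likewise a hypothetical even split of the 129 products across the 10 marketplaces, made only for illustration.

```python
import math

def wald_interval(share, n, z=1.96):
    """Approximate 95% confidence interval for a proportion.

    Valid only for a simple random sample; it says nothing about selection bias.
    """
    standard_error = math.sqrt(share * (1 - share) / n)
    return share - z * standard_error, share + z * standard_error

# Whole survey: 60% non-compliant out of 129 products (figures from the report).
low, high = wald_interval(0.60, 129)
print(f"All 129 products:           {low:.0%} to {high:.0%}")

# Hypothetical: an even split would leave roughly 13 products per marketplace.
low_m, high_m = wald_interval(0.60, 13)
print(f"~13 products per marketplace: {low_m:.0%} to {high_m:.0%}")
```

Even in the best case of random sampling, the headline 60% carries a margin of roughly ±8.5 percentage points. For a single marketplace with about 13 products, the plausible range runs from roughly 33% to 87%. A non-random sample can be off by far more than either interval suggests.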

This is not a unique case. The use of “out-of-context” evidence has been a recurrent issue in the debate over product safety, as this author pointed out in Fighting Counterfeits or Counterfeiting Policy? A European Dilemma, a previous post on the Evidence Hub, a Lisbon Council project created to discuss and disseminate the evidence being used in policymaking. For starters, there should be a thick blanket of caution over the headline €121 billion counterfeit market estimate that the European Commission cites in the impact assessment of the Digital Services Act. The €121 billion figure comes from Trends in Trade in Counterfeit and Pirated Goods, a European Union Intellectual Property Office (EUIPO) and Organisation for Economic Co-operation and Development (OECD) report, which took a much more careful approach to the data. It noted that most counterfeit goods seized at the border are watches, clothing and bags, brand-sensitive items where the original is worth much more than the cheaper knock-off. But the market figure presented is calculated from the value of the original good, not the estimated value of the much cheaper counterfeit. This, as the EUIPO puts it bluntly, “may lead to an inflated estimated value” in the overall figure. Yet nuance like this often gets lost in the policy debate, and that can lead not only to wrong conclusions but also to wrong actions.
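
The valuation issue the EUIPO flags is easy to see with a toy calculation. In the Python sketch below, the seizure size and both prices are invented for illustration; the point is only how strongly the choice of price drives the total.

```python
# Hypothetical seizure: the quantity and both prices are invented for illustration.
seized_units = 1_000
price_of_genuine_item = 5_000.0  # euros, assumed retail price of the original watch
price_of_counterfeit = 50.0      # euros, assumed street price of the knock-off

# Valuing the same seizure two ways.
value_at_genuine_price = seized_units * price_of_genuine_item
value_at_counterfeit_price = seized_units * price_of_counterfeit

# How many times larger the headline figure becomes under the first method.
inflation_factor = value_at_genuine_price / value_at_counterfeit_price

print(f"Valued at genuine price:     EUR {value_at_genuine_price:,.0f}")
print(f"Valued at counterfeit price: EUR {value_at_counterfeit_price:,.0f}")
print(f"Ratio: {inflation_factor:.0f}x")
```

With these made-up prices, the same seizure is worth a hundred times more on paper when valued at the price of the genuine article. Real price gaps vary by product, so this says nothing about the size of any distortion in the €121 billion estimate; it only shows why the EUIPO cautions that the method “may lead to an inflated estimated value.”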

And there are other examples. One recurrent policy argument is that counterfeit goods are a major danger to consumers. To prove this, in a summary of the data compiled in the Counterfeit and Piracy Watch List, a European Commission-led project in which stakeholders are invited to report marketplaces where they suspect counterfeit goods are being sold, the European Commission reported that “97% of reported dangerous counterfeit goods were assessed as posing a serious risk to consumers.” But the data came from a different source: the Qualitative Study on Risks Posed by Counterfeits to Consumers, an EUIPO report. There, the EUIPO stated plainly that the dataset used for the survey was too small to allow for statistically significant analysis, and it even labelled the study “qualitative” as a nod to the limited conclusions that should be drawn from so small a sample. Even so, the results told a different story than a superficial glance might reveal. Of the 15,459 dangerous products then identified in the EU Rapid Alert System – an online portal where countries can share information with other countries about dangerous goods they have identified – only 191 were counterfeit. In other words, only about 1% of the dangerous goods turned out to be fake, not the 97% you might have thought if you had only ever seen the original statement presented out of context.
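
The 97% and the roughly 1% answer two different questions, and a short Python sketch makes the difference explicit. The first is the share of dangerous counterfeits rated as posing a serious risk; the second is the share of all dangerous products in the Rapid Alert System that turned out to be counterfeit. Only the 97%, 15,459 and 191 figures come from the text; the variable names are illustrative.

```python
# Figures quoted in the text.
dangerous_products_reported = 15_459  # dangerous products in the EU Rapid Alert System
counterfeit_dangerous_products = 191  # of which were counterfeit

# Question 1: of the dangerous counterfeits, what share posed a serious risk?
# The Commission's summary puts this at 97%.
serious_risk_share_among_counterfeits = 0.97

# Question 2: of all dangerous products, what share were counterfeit?
counterfeit_share_among_dangerous = (
    counterfeit_dangerous_products / dangerous_products_reported
)

print(f"Serious risk among dangerous counterfeits: {serious_risk_share_among_counterfeits:.0%}")
print(f"Counterfeits among all dangerous products: {counterfeit_share_among_dangerous:.1%}")
```

The second line works out to about 1.2%. Confusing the two is a classic case of reading a conditional share in the wrong direction: a high share of risky items within a small group says little about how much that group contributes to the overall problem.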

Most of these questions would be academic if policy were not due to be made on the basis of these statistics – and their out-of-context citation. Instead, rules are being drawn up, sometimes hastily and with only limited impact assessment, that would require online platforms to take steps above and beyond the commitments already made in the Product Safety Pledge and the Memorandum of Understanding on the Sale of Counterfeit Goods on the Internet. Among the provisions in the DSA are expanded rules requiring intermediaries to verify the accuracy of trader information and listed goods before agreeing to sell them (making their “best efforts” towards that end) and, according to the draft favoured by the European Parliament, to carry out random checks on goods offered for sale to confirm that they are not illegal. As Victoria de Posson, senior manager for public policy at the Computer and Communications Industry Association (CCIA), notes: “While the public debate has centered around a few well-known companies, the truth is that lawmakers will be imposing new obligations on tens of thousands of companies in Europe, most of which are small businesses.”

Behind these efforts is an unspoken implication that e-commerce is somehow contributing to a rise in the sale of counterfeit and illegal goods. But despite the continuous rise in e-commerce over the years, the amount of counterfeit goods in EU trade is falling, according to Global Trade in Fakes: A Worrying Threat, an OECD/EUIPO report. This remarkable trend coincides with several important developments: border agents and shipping companies are working better together to crack down on the brand knock-off trade and better police the shipping container industry, which accounts for 81% of counterfeit goods trade; and the onset of self-reporting procedures and marketplace-based crackdowns has brought needed transparency and accountability to a process which had few viable controls up until recently.

But the debate over what to do now seems to be taking place in an alternate universe, where the attitude one shows towards platforms matters more than the actual effects of platform behaviour. The tone has become more of a Sergio Leone western than a policy discussion, as one recent tweet suggested.

Regardless of where the DSA lands – and it seems unlikely at this point that the governing bodies of Europe will re-examine the statistical basis on which they have drawn some rather wide-ranging conclusions – we should all reflect on how the European debate on technology can be brought more firmly into the realm of evidence-based policymaking and why this move – so obvious on the surface – is so difficult to realise in practice. For starters, this discussion should serve as a gentle reminder of a frequently overlooked point: the problem of misinformation is much more complex than we perceive. The same logical fallacies we consider misinformation of the worst kind can still find a place in official policymaking – without even raising an eyebrow.

And there are better ways to handle evidence than the intense, deeply politicised cherry-picking that has gone into the illegal-content proposals. “Handling complex scientific issues in government is never easy – especially during a crisis when uncertainty is high, stakes are huge and information is changing fast,” writes Geoff Mulgan, professor of collective intelligence, public policy and social innovation, in “COVID’s Lessons for Governments? Don’t Cherry-Pick Advice, Synthesize It,” a recent article in Nature, the science journal. “There’s a striking imbalance between the scientific advice available and the capacity to make sense of it,” he adds, noting “the worst governments rely on intuition.”

Researchers have a way of avoiding problems like the ones detailed in this post – it is called “evidence synthesis,” which is “the process of bringing together information from a range of sources and disciplines to inform debates and decisions on specific issues,” according to one definition. It is also a way of making sure that the facts we use for policymaking reflect real market conditions and not the hand-picked realities that special interests would like us to see. It is in everyone’s interest to make sure the evidence is balanced and the standards are robust, sustained and proportionate. It makes for better policymaking – and for better lives as well.

David Osimo is director of research at the Lisbon Council
